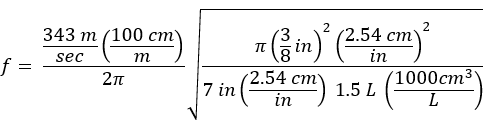

Richard Askey came up with the following formula for Fibonacci numbers:

Here φ is the golden ratio, (1 + √5)/2.

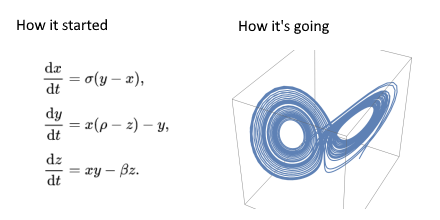

We’ll use this formula as the jumping off point to discuss the implications of how equations are written, complex logarithms, and floating point computing in Python.

Reading forward and backward

Of course every equation of the form a = b can be rewritten b = a. The two forms of the equation have the same denotation but different connotations. Equations have an implied direction of application. When you see an equation written as a = b, it’s often the case that the equation is usually applied by substituting b for a.

For example, take the equation A = πr². The most natural application of this equation is that you can compute the area of a circle of radius r by squaring r and multiplying by π. It’s less common to see something like 9π and think “Oh, that’s the area of a circle of radius 3.”

Note also that this is also how nearly every programming language works: a = b means update the variable a to the value b</code.

In writing Askey’s formula as above, I’m implying that it might be useful to express the mth Fibonacci number in terms of hyperbolic sine evaluated at complex arguments. Why in the world would you want to do that? The Fibonacci numbers are elementary and concrete, but logs and hyperbolic functions are not so much, especially with complex arguments. Askey’s formula is interesting, and that would be enough, but it could be useful if some things are easier to prove using the formulation on the right side. See, for example, [1].

If I had written the formula above as

the implication would be that the complicated expression on the left can be reduced to the simple expression on the right. It would be gratifying if some application lead naturally to the formulation on the left, but that seems highly unlikely.

I’ll close out this section with two more remarks about the direction of reading equations. First, it is a common pattern in proofs to begin by applying an equation left-to-right and to conclude by applying the same equation right-to-left. Second, it’s often a clever technique to applying an equation in the opposite of the usual order. [2]

Branch cuts

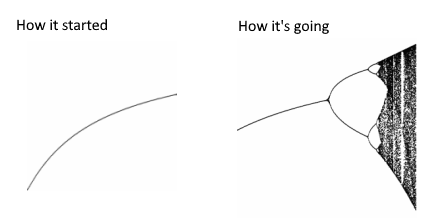

What does it mean to take the logarithm of a complex number? Well, the same as it does to take the logarithm of any number: invert the exp function. That is, log(x) = y means that y is a number such that exp(y) = x. Except that it’s not quite that simple. Notice the indefinite article: “a number such that …”. For positive real x, there is a unique real number y such that exp(y) = x. But there are infinitely many complex solutions y, even if x is real: for any integer n, exp(y + 2πni) = exp(y).

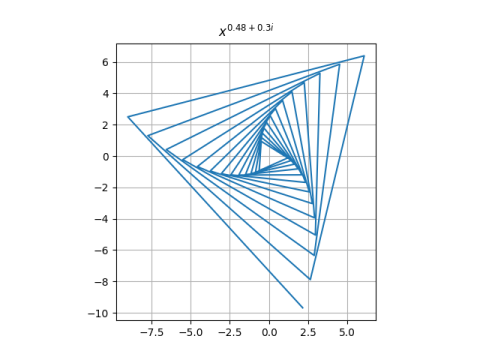

When we extend log to complex arguments, we usually want to do so in such a way that we keep familiar logs the same. We want to extend the logarithm function from the positive real axis into more of the complex plane. We can’t extend it continuously to the entire complex plane. We have to exclude some path from the origin out to infinity, and this path is known as a branch cut.

The conventional choice for log is to cut out the negative real axis. That’s what NumPy does.

Python implementation

Let’s see whether Askey’s formula works when coded up in Python.

from numpy import sinh, log

def f(m):

phi = (1 + 5**0.5)/2

return 2*sinh(m*log(phi*1j))/(5**0.5*(1j)**m)

Note that Python uses j for the imaginary unit rather than i. And you can’t just use j in the code above; you have to use 1j. That let’s Python use j as an ordinary variable when it’s not part of a complex number.

When we evaluate f(1), we expect 1, the first Fibonacci number. Instead, we get

(1-2.7383934913210137e-17j)

Although this is surprising at first glance, the imaginary part is tiny. Floating point numbers in Python have about 16 significant figures (more details here) and so the imaginary part is as close to zero as we can expect for any floating point calculation.

Here are the first five Fibonacci numbers using the code above.

(1-2.7383934913210137e-17j)

(1.0000000000000002-1.6430360947926083e-16j)

(2-3.2860721895852156e-16j)

(3.0000000000000013-7.667501775698841e-16j)

(5.0000000000000036-1.5061164202265582e-15j)

If you took the real part and rounded to the nearest integer, you’d have yet another program to compute Fibonacci numbers, albeit an inefficient one.

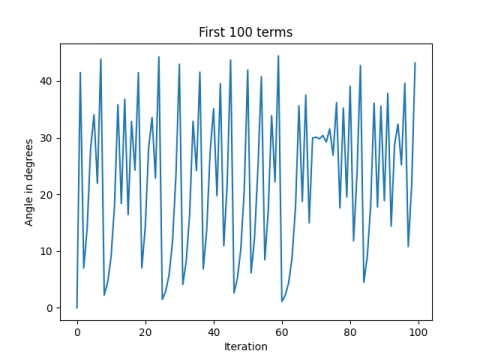

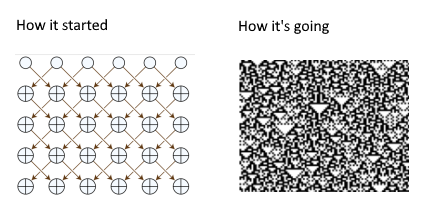

Just out of curiosity, let’s see how far we could use this formula before rounding error makes it incorrect.

import functools

@functools.lru_cache()

def fib0(m):

if m == 1 or m == 2:

return 1

else:

return fib0(m-1) + fib0(m-2)

def fib1(m):

return round(f(m).real)

for m in range(1, 70):

a, b = fib0(m), fib1(m)

if a != b:

print(f"m: {m}, Exact: {a}, f(m): {f(m)}")

The lru_cache decorator adds memoization to our recursive Fibonacci generator. It caches computed values behind the scenes so that the code does not evaluate over and over again with the same arguments. Without it, the function starts to bog down for values of m in the 30’s. With it, the time required to execute the code isn’t noticeable.

The code above shows that fib1 falls down when m = 69.

m: 69, Exact: 117669030460994

f(m): (117669030460994.7-0.28813316817427764j)

Related posts

[1] Thomas Osler and Adam Hilburn. An Unusual Proof That Fm Divides Fmn Using Hyperbolic Functions. The Mathematical Gazette, Vol. 91, No. 522 (Nov., 2007), pp. 510-512.

[2] OK, a third comment. You might see equations written in different directions according to the context. For example, in a calculus book you’d see

1/(1-x) = 1 + x + x² + x³ + …

but in a book on generating functions you’re more likely to see

1 + x + x² + x³ + … = 1/(1-x)

because calculus problems start with functions and compute power series, but generating function applications create power series and then manipulate their sums. For another example, differential equation texts start with a differential equation and compute functions that satisfy the equation. Books on special functions might start with a function and then present a differential equation that the function satisfies because the latter form makes it easier to prove certain things.