This post muses about what it means to learn a software library. I’ll use Pandas as an example, but the post isn’t just about Pandas.

Suppose you say “I want to learn Pandas.” That implicitly assumes Pandas is one thing, and in a sense it is. In another sense Pandas is hundreds of things.

At the top level, the pandas module (version 1.2.0) has 142 things inside.

    >>> import pandas as pd
    >>> len(dir(pd))
    142

The two most important things inside are the Series and DataFrame objects. They each in turn contain hundreds of things.

    >>> len(dir(pd.Series))
    434
    >>> len(dir(pd.DataFrame))
    441

That’s evidence of Pandas’ diversity. But here’s evidence of its unity: most of the things inside these two objects have the same names.

    >>> s = set(dir(pd.Series))
    >>> d = set(dir(pd.DataFrame))
    >>> len(s.union(d))
    491
    >>> len(s - d)
    50
    >>> len(d - s)
    57

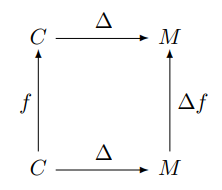

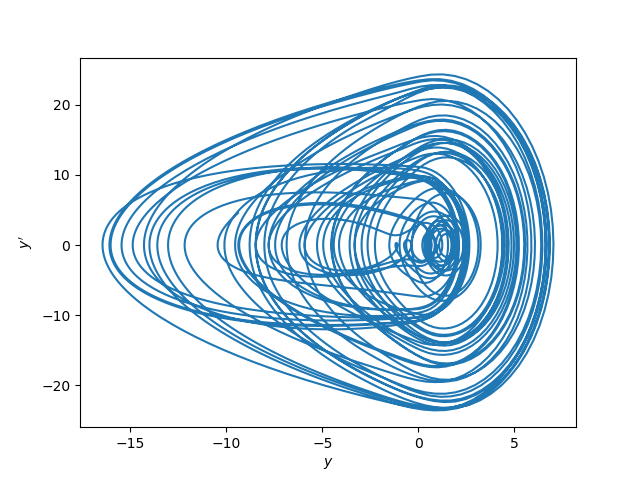
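The set differences above imply the size of the overlap: with the version 1.2.0 counts, 434 - 50 = 384 names appear on both objects. Here is a minimal sketch that computes the overlap directly and lists a few of the names unique to each side. The variable names are my own, and the counts you see will depend on your Pandas version.

```python
import pandas as pd

series_names = set(dir(pd.Series))
frame_names = set(dir(pd.DataFrame))

# Names available on both objects, and names unique to each.
shared = series_names & frame_names
only_series = series_names - frame_names
only_frame = frame_names - series_names

print(len(shared), "names in common")
print(f"{len(shared) / len(series_names | frame_names):.0%} of all names are shared")

# A peek at the public names that exist on only one of the two objects.
print(sorted(n for n in only_series if not n.startswith("_"))[:10])
print(sorted(n for n in only_frame if not n.startswith("_"))[:10])
```

Skimming the two short lists of unique names is a quick way to see where Series and DataFrame genuinely differ.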

Pandas kinda has a fractal dimension, having both complexity and unity. The best way to think about it is not as one monolithic thing, or as hundreds of isolated things. It’s a coherent, but not perfectly coherent, collection of related things. This is true of all software libraries. Pandas is more coherent than most libraries because it was initially the product of one mind, that of Wes McKinney.

This has a couple of implications for what it means to “learn Pandas.” Because Pandas is big, you have to explore it strategically, not exhaustively. And because Pandas is coherent, part of what it means to learn Pandas is to develop a feel for the way Pandas does things.

No one is going to learn Pandas by studying every object, every method on every object, and every argument to every method on every object. It’s too big, and studying it that way is unnecessary anyway.

There’s probably something like a Pareto distribution on the usefulness of features. The most commonly used features are used far, far more often than the most obscure features.

It would be interesting to do some kind of survey to see which features are actually used and how often. But I don’t think that’s practical. The easiest thing to do would be to find some large code base that heavily uses Pandas. But that wouldn’t be typical of how Pandas is used. Most lines of code using Pandas are probably scattered over millions of small scripts, many of which are not production code.
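For your own scripts, though, a rough tally is easy to get. The sketch below is one crude way to do it, not a real survey tool: it parses Python source with the standard `ast` module and counts attribute accesses whose names also exist on the pandas module, Series, or DataFrame. The function name `count_pandas_like_attributes` and the sample script are hypothetical, and because there is no type inference, something like `x.sum()` is counted whether or not `x` is a Pandas object.

```python
import ast
from collections import Counter

import pandas as pd

# Public names found on the pandas module, Series, or DataFrame.
PANDAS_NAMES = {
    name
    for obj in (pd, pd.Series, pd.DataFrame)
    for name in dir(obj)
    if not name.startswith("_")
}


def count_pandas_like_attributes(source: str) -> Counter:
    """Count attribute accesses in `source` whose names also exist in pandas.

    Hypothetical helper for illustration. It matches on names only,
    so it will overcount names shared with other libraries.
    """
    counts = Counter()
    for node in ast.walk(ast.parse(source)):
        if isinstance(node, ast.Attribute) and node.attr in PANDAS_NAMES:
            counts[node.attr] += 1
    return counts


# The script is only parsed, never executed, so data.csv need not exist.
sample_script = """
import pandas as pd
df = pd.read_csv("data.csv")
df = df.dropna()
print(df.groupby("key").mean())
print(df.head())
print(df.head(10))
"""

usage = count_pandas_like_attributes(sample_script)
for name, n in usage.most_common():
    print(name, n)
```

Run over a folder of your own scripts, a tally like this gives a personal version of the usage distribution, which is arguably the one that matters for deciding what to learn first.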

A well-designed library makes it possible to make good guesses about functionality you haven’t used. You learn the gestalt of the library. You can always look up API documentation as needed, but you can’t develop an intuition for a library just-in-time.
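Here is a small illustration of the kind of guess that pays off, using toy data of my own. If you know a method on a Series, the method with the same name on a DataFrame usually does the analogous thing, one level up.

```python
import pandas as pd

s = pd.Series([3, 1, 2], name="x")
df = pd.DataFrame({"x": [3, 1, 2], "y": [6.0, 4.0, 5.0]})

# The same method name works on both objects.
print(s.head(2))
print(df.head(2))

# Reductions drop one level: a Series reduces to a scalar,
# a DataFrame reduces to a Series with one entry per column.
series_mean = s.mean()
frame_mean = df.mean()
print(series_mean)
print(frame_mean)
```

Once you have seen this pattern with `mean`, it is a reasonable guess that `sum`, `min`, `max`, and `median` follow it too, and they do.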

“Learn Pandas” is a daunting goal, and maybe an impossible goal if by “learn” you mean explore exhaustively. But “learn how to do my common tasks quickly in Pandas” and “develop a feel for how to do things in Pandas” are much smaller tasks.