I was looking up the entropy of a Student t distribution and something didn’t seem right, so I wanted to look at familiar special cases.

The Student t distribution with ν degrees of freedom has two important special cases: ν = 1 and ν = ∞. When ν = 1 we get the Cauchy distribution, and in the limit as ν → ∞ we get the normal distribution. The expression for entropy is simple in these two special cases, but it’s not at all obvious that the general expression at ν = 1 and ν = ∞ gives the entropy for the Cauchy and normal distributions.

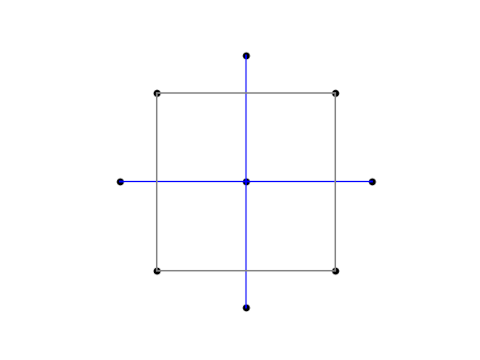

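The entropy of a Cauchy random variable (with scale 1) is

$$ \log(4\pi) $$

and the entropy of a normal random variable (with scale 1) is

$$ \frac{\log(2\pi) + 1}{2}. $$

The entropy of a Student t random variable with ν degrees of freedom is

$$ \frac{\nu+1}{2}\left(\psi\left(\frac{\nu+1}{2}\right) - \psi\left(\frac{\nu}{2}\right)\right) + \log\left(\sqrt{\nu}\, B\left(\frac{\nu}{2}, \frac{1}{2}\right)\right). $$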

Here ψ is the digamma function, the derivative of the log of the gamma function, and B is the beta function. These two functions are implemented as psi and beta in Python's scipy.special module, and as PolyGamma and Beta in Mathematica. The equation for the entropy comes from Wikipedia.

This post will show numerically and analytically that the general expression does have the right special cases. As a bonus, we’ll prove an asymptotic formula for the entropy along the way.

Numerical evaluation

Numerical evaluation shows that the entropy expression with ν = 1 does give the entropy for a Cauchy random variable.

    from numpy import pi, log, sqrt
    from scipy.special import psi, beta

    def t_entropy(nu):
        # Digamma part of the entropy expression
        S = 0.5*(nu + 1)*(psi(0.5*(nu+1)) - psi(0.5*nu))
        # Log of sqrt(nu) times the beta function
        S += log(sqrt(nu)*beta(0.5*nu, 0.5))
        return S

    cauchy_entropy = log(4*pi)
    print(t_entropy(1) - cauchy_entropy)

This prints 0.
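As a further sanity check, the closed-form expression can be compared against the entropy values that SciPy itself reports. This is only a sketch: it assumes `scipy.stats.cauchy.entropy()` and `scipy.stats.t.entropy(df)` are available, and the names `cauchy_gap` and `t_gaps` are introduced here for illustration.

```python
from numpy import log, sqrt
from scipy import stats
from scipy.special import psi, beta

def t_entropy(nu):
    # Same closed-form expression as in the post
    S = 0.5*(nu + 1)*(psi(0.5*(nu + 1)) - psi(0.5*nu))
    S += log(sqrt(nu)*beta(0.5*nu, 0.5))
    return S

# Gap between the closed form at nu = 1 and SciPy's Cauchy entropy
cauchy_gap = float(t_entropy(1) - stats.cauchy.entropy())

# Gaps between the closed form and SciPy's Student t entropy
# for a few (arbitrarily chosen) degrees of freedom
t_gaps = [float(t_entropy(nu) - stats.t.entropy(nu)) for nu in (2, 5, 30)]

print(cauchy_gap)
print(t_gaps)
```

All of the printed gaps should be zero up to floating point or numerical integration error, depending on how the installed SciPy version computes the t entropy.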

Experiments with large values of ν show that the entropy for large ν is approaching the entropy for a normal distribution. In fact, it seems the difference between the entropy for a t distribution with ν degrees of freedom and the entropy of a standard normal distribution is asymptotic to 1/ν.

    normal_entropy = 0.5*(log(2*pi) + 1)
    for i in range(5):
        print(t_entropy(10**i) - normal_entropy)

This prints

    1.112085713764618
    0.10232395977100861
    0.010024832113557203
    0.0010002498337291499
    0.00010000250146458001
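One way to make the 1/ν pattern easier to see is to multiply each difference by ν: if the difference really is asymptotic to 1/ν, the scaled values should approach 1. The sketch below repeats the function definition so that it runs on its own; `scaled_gaps` is a name introduced here for illustration.

```python
from numpy import log, pi, sqrt
from scipy.special import psi, beta

def t_entropy(nu):
    # Same closed-form expression as in the post
    S = 0.5*(nu + 1)*(psi(0.5*(nu + 1)) - psi(0.5*nu))
    S += log(sqrt(nu)*beta(0.5*nu, 0.5))
    return S

normal_entropy = 0.5*(log(2*pi) + 1)

# If the gap behaves like 1/nu, then nu * gap should tend to 1
scaled_gaps = [nu*(t_entropy(nu) - normal_entropy) for nu in (10, 100, 1000, 10000)]

for s in scaled_gaps:
    print(s)
```

The scaled values decrease toward 1 as ν grows, consistent with the differences printed above.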

Analytical evaluation

There are tidy expressions for the ψ function at a few special arguments, including 1 and 1/2. And the beta function has a special value at (1/2, 1/2).

We have ψ(1) = −γ and ψ(1/2) = −2 log 2 − γ, where γ is the Euler–Mascheroni constant. When ν = 1 the factor (ν + 1)/2 equals 1, so the first half of the expression for the entropy of a t distribution with 1 degree of freedom reduces to ψ(1) − ψ(1/2) = 2 log 2. Also, B(1/2, 1/2) = π, so the second half is log π. Adding these together we get 2 log 2 + log π, which is the same as log 4π.
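The special values used here are easy to confirm numerically. The following is a minimal sketch, assuming NumPy's `euler_gamma` constant for γ; the variable names are introduced here for illustration.

```python
from numpy import euler_gamma, log, pi
from scipy.special import psi, beta

# Each gap should be zero up to floating point error
psi_one_gap = psi(1) + euler_gamma                 # psi(1) = -gamma
psi_half_gap = psi(0.5) + 2*log(2) + euler_gamma   # psi(1/2) = -2 log 2 - gamma
beta_gap = beta(0.5, 0.5) - pi                     # B(1/2, 1/2) = pi

# With nu = 1 the factor (nu + 1)/2 is 1, so the first half is psi(1) - psi(1/2)
first_half = psi(1) - psi(0.5)

print(psi_one_gap, psi_half_gap, beta_gap)
print(first_half - 2*log(2))
```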

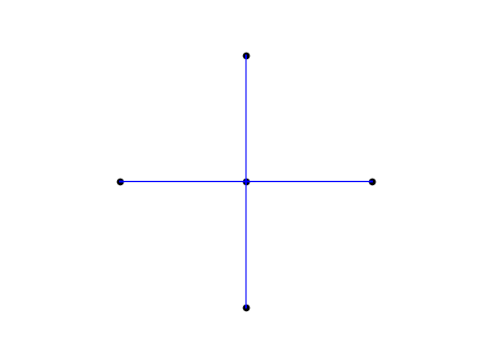

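For large z, we have the asymptotic series

$$ \psi(z) \sim \log z - \frac{1}{2z} - \frac{1}{12z^2} + \cdots $$

See, for example, A&S 6.3.18. We'll also need the well-known fact that log(1 + z) ∼ z for small z, along with the next term of that expansion, log(1 + z) = z − z²/2 + O(z³), which is needed to pin down the coefficient of 1/ν. Applying these to the first half of the entropy expression gives

$$ \frac{\nu+1}{2}\left(\psi\left(\frac{\nu+1}{2}\right) - \psi\left(\frac{\nu}{2}\right)\right) = \frac{1}{2} + \frac{3}{4\nu} + O\left(\frac{1}{\nu^2}\right). $$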
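To see how the digamma series handles the first half of the entropy expression, the algebra can be sketched as follows. This is a worked reconstruction rather than a quotation from a reference; it uses the series for ψ through the 1/(2z) term (the 1/(12z²) terms only contribute at order 1/ν³ to the difference) and the expansion log(1 + z) = z − z²/2 + O(z³).

$$
\begin{aligned}
\psi\left(\frac{\nu+1}{2}\right) - \psi\left(\frac{\nu}{2}\right)
&= \log\left(1 + \frac{1}{\nu}\right) + \frac{1}{\nu} - \frac{1}{\nu+1} + O\left(\frac{1}{\nu^3}\right) \\
&= \left(\frac{1}{\nu} - \frac{1}{2\nu^2}\right) + \frac{1}{\nu^2} + O\left(\frac{1}{\nu^3}\right) \\
&= \frac{1}{\nu} + \frac{1}{2\nu^2} + O\left(\frac{1}{\nu^3}\right), \\
\frac{\nu+1}{2}\left(\psi\left(\frac{\nu+1}{2}\right) - \psi\left(\frac{\nu}{2}\right)\right)
&= \frac{1}{2} + \frac{3}{4\nu} + O\left(\frac{1}{\nu^2}\right).
\end{aligned}
$$

Note that keeping only the first-order approximation log(1 + z) ≈ z would change the coefficient of 1/ν in this half of the expression, which is why the second-order term is carried along.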

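Next we use the definition of the beta function as a ratio of gamma functions, B(x, y) = Γ(x)Γ(y)/Γ(x + y), the fact that Γ(1/2) = √π, and the asymptotic formula Γ(z)/Γ(z + 1/2) = z^(−1/2) (1 + 1/(8z) + O(1/z²)) to find that

$$ \log\left(\sqrt{\nu}\, B\left(\frac{\nu}{2}, \frac{1}{2}\right)\right) = \frac{1}{2}\log(2\pi) + \frac{1}{4\nu} + O\left(\frac{1}{\nu^2}\right). $$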

This shows that the entropy of a Student t random variable with ν degrees of freedom is asymptotically

$$ \frac{\log(2\pi) + 1}{2} + \frac{1}{\nu} $$

for large ν. So we do indeed get the entropy of a normal random variable in the limit, and the difference between the Student t and normal entropies is asymptotically 1/ν, proving the conjecture inspired by the numerical experiment above.
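The two halves of the entropy expression can also be checked separately. Working through the expansions suggests that the digamma half behaves like 1/2 + 3/(4ν) and the beta half like (1/2) log 2π + 1/(4ν), so that the 1/ν terms add up to 1/ν. The sketch below tests this numerically at a single large ν; the helper names `digamma_half` and `beta_half` are hypothetical, introduced only for this illustration.

```python
from numpy import log, pi, sqrt
from scipy.special import psi, beta

def digamma_half(nu):
    # First half of the entropy expression: the digamma difference term
    return 0.5*(nu + 1)*(psi(0.5*(nu + 1)) - psi(0.5*nu))

def beta_half(nu):
    # Second half: log of sqrt(nu) times the beta function
    return log(sqrt(nu)*beta(0.5*nu, 0.5))

nu = 1000

# Subtract each half's limiting value and scale by nu to expose the 1/nu coefficient
scaled_first = nu*(digamma_half(nu) - 0.5)
scaled_second = nu*(beta_half(nu) - 0.5*log(2*pi))

print(scaled_first, scaled_second, scaled_first + scaled_second)
```

The first two printed values should be close to 3/4 and 1/4 respectively, and their sum close to 1.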