Newton’s method for solving f(x) = 0 converges quickly once it starts to converge. Specifically, it converges quadratically: the error is roughly squared at each step. This means that the number of correct decimal places doubles at each iteration of Newton’s method.

Doubling the number of decimal places is nice, but what if we could triple the number of correct decimals at each step? Edmond Halley, best known for the comet that bears his name, came up with a way to do just that. Newton’s method converges quadratically, but Halley’s method converges cubically.

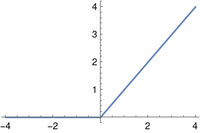

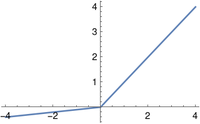

Newton’s method updates its iterates using the rule

$$x_{n+1} = x_n - \frac{f(x_n)}{f'(x_n)}$$

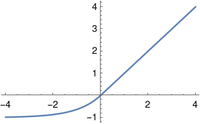

Halley’s variation uses the rule

$$x_{n+1} = x_n - \frac{2 f(x_n) f'(x_n)}{2 f'(x_n)^2 - f(x_n) f''(x_n)}$$

Halley’s method makes more progress per iteration, but it also takes more work per iteration, and so in practice it’s not usually an improvement over Newton’s method. But in some cases it could be.
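To see the difference in convergence rates, here is a minimal sketch that applies both update rules to a test function. The test function f(x) = x² − 2, the starting point 1.0, and all the function names are assumptions chosen for illustration.

```python
import math

def newton_step(f, df, x):
    """One Newton update: x - f/f'."""
    return x - f(x) / df(x)

def halley_step(f, df, d2f, x):
    """One Halley update: x - 2 f f' / (2 f'^2 - f f'')."""
    fx, dfx, d2fx = f(x), df(x), d2f(x)
    return x - 2 * fx * dfx / (2 * dfx * dfx - fx * d2fx)

# Illustrative test problem (an assumption): the positive root of x^2 - 2.
f = lambda x: x * x - 2
df = lambda x: 2 * x
d2f = lambda x: 2.0
root = math.sqrt(2)

def errors(step, x, n):
    """Apply `step` n times starting from x and record the error after each step."""
    out = []
    for _ in range(n):
        x = step(x)
        out.append(abs(x - root))
    return out

newton_errors = errors(lambda x: newton_step(f, df, x), 1.0, 4)
halley_errors = errors(lambda x: halley_step(f, df, d2f, x), 1.0, 4)

for i, (en, eh) in enumerate(zip(newton_errors, halley_errors), start=1):
    print(f"step {i}: Newton error {en:.3e}, Halley error {eh:.3e}")
```

On this problem the Halley errors should shrink noticeably faster per step, at the cost of one extra function evaluation per step.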

Evaluating efficiency

In root-finding problems, the function f is usually relatively time-consuming to evaluate. We can assume nearly all the work in root-finding goes into evaluating f and its derivatives, and we can ignore the rest of the arithmetic in the method.

Newton’s method requires evaluating the function f and its derivative f ′. Halley’s method requires these function evaluations as well and also requires evaluating the second derivative f ′′.

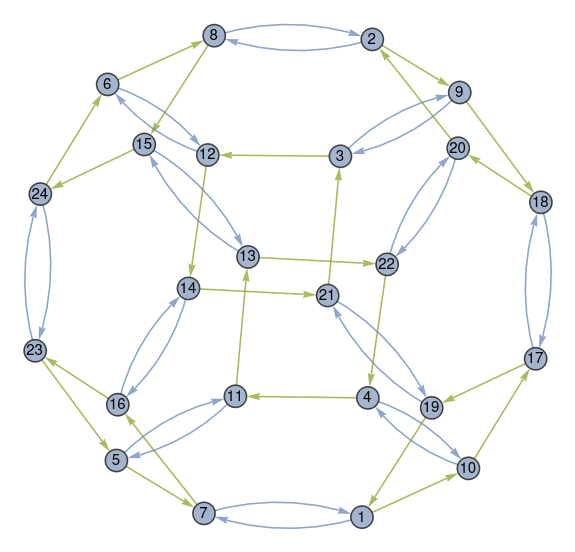

Three iterations of Newton’s method make roughly as much progress toward finding a root as two iterations of Halley’s method: squaring the error three times raises it to the 8th power, and cubing it twice raises it to the 9th. But since Newton’s method requires two function evaluations per iteration and Halley’s method requires three, both require six function evaluations to make about the same amount of progress. So in general Halley’s method is not an improvement over Newton’s method.

When Halley might be better

Suppose we had a way to quickly compute the second derivative of f at a point from the values of f and its first derivative at that point, rather than by having to evaluate the second derivative explicitly. In that case two iterations of Halley’s method might make as much progress as three iterations of Newton’s method but with less effort, four function evaluations rather than six.
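The bookkeeping in this comparison is simple enough to write out. The sketch below uses an idealized model, in which k iterations of a method of order p raise the error to the power p^k and constants are ignored. The helper names are made up for illustration.

```python
def error_exponent(order, iterations):
    """Idealized model: after k steps of an order-p method, error -> error**(p**k)."""
    return order ** iterations

def cost(iterations, evals_per_iteration):
    """Total function evaluations, counting f and each derivative as one evaluation."""
    return iterations * evals_per_iteration

# Newton: three iterations, each evaluating f and f'.
newton = (error_exponent(2, 3), cost(3, 2))
# Halley: two iterations, each evaluating f, f', and f''.
halley = (error_exponent(3, 2), cost(2, 3))
# Halley when f'' comes for free from f and f'.
halley_cheap = (error_exponent(3, 2), cost(2, 2))

print("Newton:           exponent %d, evaluations %d" % newton)
print("Halley:           exponent %d, evaluations %d" % halley)
print("Halley, cheap f'': exponent %d, evaluations %d" % halley_cheap)
```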

Many of the functions that are used most in applied mathematics satisfy second-order differential equations, and so it is common to be able to compute the second derivative from the function and its derivative.

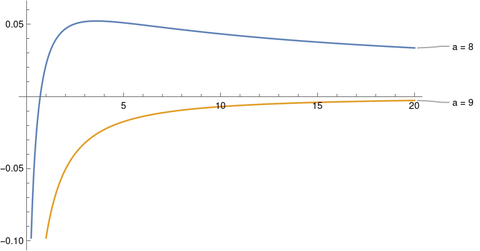

For example, suppose we want to find the zeros of Bessel functions Jν. Bessel functions satisfy Bessel’s differential equation; that’s where Bessel functions get their name.

$$x^2 y'' + x y' + (x^2 - \nu^2) y = 0$$

This means that once we have evaluated Jν and its first derivative, we can compute the second derivative from these values as follows.

$$J_\nu''(x) = \frac{(\nu^2 - x^2)\, J_\nu(x) - x\, J_\nu'(x)}{x^2}$$
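Here is a rough sketch of the idea for ν = 0, using only the standard library. It makes several assumptions for illustration: the Bessel functions are computed from their power series, which is adequate only for moderate x; the derivative uses the identity J₀′ = −J₁; and the function names and starting guess are made up. In practice you would call a library routine for Jν and its derivative.

```python
import math

def bessel_j(n, x, terms=40):
    """Power series for J_n(x), integer n >= 0. Fine for moderate x only."""
    half = x / 2.0
    return sum(
        (-1) ** k * half ** (2 * k + n) / (math.factorial(k) * math.factorial(k + n))
        for k in range(terms)
    )

def j0_zero_halley(x, tol=1e-12, max_iter=20):
    """Find a zero of J_0 near x with Halley's method, never evaluating J_0'' directly."""
    for _ in range(max_iter):
        y = bessel_j(0, x)       # J_0(x)
        dy = -bessel_j(1, x)     # J_0'(x) = -J_1(x)
        # Bessel's equation with nu = 0: x^2 y'' + x y' + x^2 y = 0.
        d2y = -dy / x - y
        step = 2 * y * dy / (2 * dy * dy - y * d2y)
        x -= step
        if abs(step) < tol:
            break
    return x

first_zero = j0_zero_halley(2.0)
print(first_zero)
```

Each iteration costs two function evaluations, the same as Newton’s method, but gets Halley’s cubic convergence.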

Maybe we’re not working with a well-studied function like a Bessel function but rather we are numerically solving a differential equation of our own creation and we want to know where the solution is zero. Halley’s method would be a natural fit because our numerical method will give us the values of y and y ′ at each step.
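As a sketch of how this might look, the code below integrates a second-order equation y″ = g(x, y, y′) with classical fourth-order Runge-Kutta and uses the resulting y and y′, plus y″ from the equation itself, in Halley’s update. The damped oscillator y″ = −0.2y′ − y, the initial conditions, the step counts, and all names are assumptions chosen so that the answer can be checked against a known closed form.

```python
import math

def rk4_advance(g, x, y, dy, x_end, n=200):
    """Integrate y'' = g(x, y, y') from x to x_end with n classical RK4 steps."""
    h = (x_end - x) / n
    for _ in range(n):
        k1y, k1v = dy, g(x, y, dy)
        k2y, k2v = dy + h / 2 * k1v, g(x + h / 2, y + h / 2 * k1y, dy + h / 2 * k1v)
        k3y, k3v = dy + h / 2 * k2v, g(x + h / 2, y + h / 2 * k2y, dy + h / 2 * k2v)
        k4y, k4v = dy + h * k3v, g(x + h, y + h * k3y, dy + h * k3v)
        y += h / 6 * (k1y + 2 * k2y + 2 * k3y + k4y)
        dy += h / 6 * (k1v + 2 * k2v + 2 * k3v + k4v)
        x += h
    return y, dy

def halley_ode_zero(g, x0, y0, dy0, guess, tol=1e-10, max_iter=20):
    """Locate a zero of the ODE solution near `guess` using Halley's method."""
    x, y, dy = x0, y0, dy0
    target = guess
    for _ in range(max_iter):
        # The integrator hands us y and y' at the current iterate (may step backward).
        y, dy = rk4_advance(g, x, y, dy, target)
        x = target
        d2y = g(x, y, dy)  # second derivative comes from the equation, no extra work
        step = 2 * y * dy / (2 * dy * dy - y * d2y)
        target = x - step
        if abs(step) < tol:
            break
    return target

# Illustrative equation (an assumption): y'' = -0.2 y' - y, y(0) = 0, y'(0) = 1.
damped = lambda x, y, dy: -0.2 * dy - y
zero = halley_ode_zero(damped, 0.0, 0.0, 1.0, guess=3.0)
print(zero)
```

For this particular equation the solution is exp(−0.1x) sin(ωx)/ω with ω = √0.99, so the first positive zero is π/ω, which gives something to compare against.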