If you look at the Wikipedia article on Weierstrass functions, you’ll find a line that says “the relation between the sigma, zeta, and ℘ functions is analogous to that between the sine, cotangent, and squared cosecant functions.” This post unpacks that sentence.

Weierstrass ℘ function

First of all, what is ℘? It’s the Weierstrass elliptic function, which is the mother of all elliptic functions in some sense. All other elliptic functions can be constructed from this function and its derivatives. As for the symbol itself, ℘ is the traditional symbol for the Weierstrass function. It’s U+2118 in Unicode, &weierp; in HTML, and \wp in LaTeX.

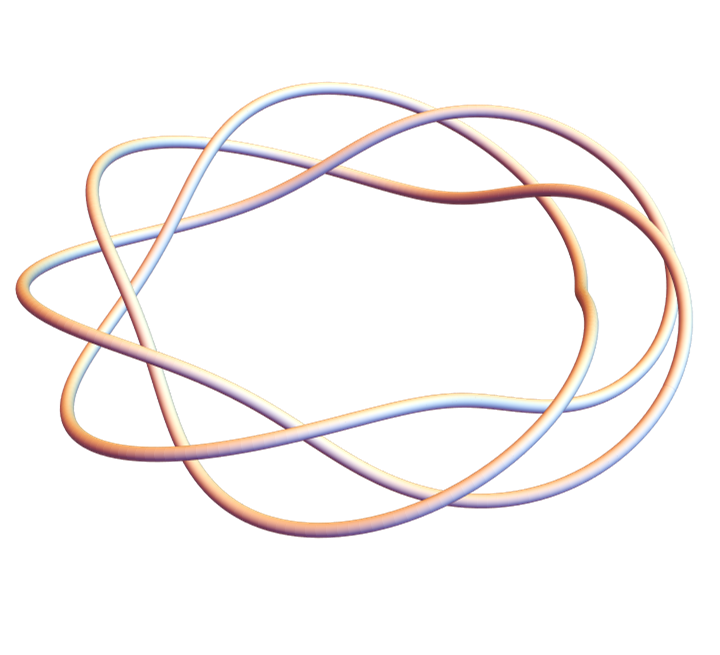

The line above suggests that ℘(x) is analogous to csc²(x). Indeed, the plots of the two functions are nearly identical.

Here’s Weierstrass’ function:

And here’s csc²:

The two plots basically agree to within the thickness of a line.

The Weierstrass function ℘ has two parameters that I haven’t mentioned. Elliptic functions are periodic in two directions in the complex plane, and so their values everywhere are determined by their values over a parallelogram. The two parameters specify the fundamental parallelogram, or at least that’s one way of parametrizing the function. The WeierstrassP function in Mathematica takes two other parameters, called the invariants, and these invariants were chosen in the plot above to match the period of the cosecant function.

Plot[Sin[x]^-2, {x, -10, 10}]

Plot[
    WeierstrassP[x, WeierstrassInvariants[{Pi/2, Pi I/2}]],
    {x, -10, 10}
]

The fundamental parallelogram is defined in terms of half periods, usually denoted with ω’s, and the invariants are denoted with g’s. The function WeierstrassInvariants converts from half periods to invariants, from ω’s to g’s.

Note that ℘ and cosecant squared are similar along the real axis, but they’re very different in the full complex plane. Trig functions are periodic along the real axis only; sine and cosine, for example, grow exponentially along the imaginary axis. Elliptic functions are periodic along both axes.
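Here is a rough numerical check of both claims that doesn’t need Mathematica. It’s a minimal sketch, not a production implementation: it assumes the same half periods as above (π/2 and πi/2) and approximates ℘ by a truncated version of its lattice sum, which converges slowly. The names `lattice_points`, `weierstrass_p`, and `csc_squared` are made up for this example. In this sketch the gap between ℘ and csc² along the real axis stays around −0.3, which is small on the scale of a plot that blows up at every pole.

```python
import cmath
import math

# Full periods corresponding to the half periods pi/2 and pi*i/2 (assumed lattice).
PERIOD_REAL = math.pi
PERIOD_IMAG = math.pi * 1j


def lattice_points(n_max):
    """Yield the nonzero lattice points m*pi + n*pi*i with |m|, |n| <= n_max."""
    for m in range(-n_max, n_max + 1):
        for n in range(-n_max, n_max + 1):
            if m != 0 or n != 0:
                yield m * PERIOD_REAL + n * PERIOD_IMAG


def weierstrass_p(z, n_max=60):
    """Approximate the Weierstrass P function by a truncated lattice sum."""
    z = complex(z)
    total = 1 / z**2
    for w in lattice_points(n_max):
        total += 1 / (z - w) ** 2 - 1 / w**2
    return total


def csc_squared(z):
    """Cosecant squared, valid for complex arguments."""
    return 1 / cmath.sin(z) ** 2


# Along the real axis the two functions differ by a small, slowly varying offset.
real_axis_gaps = [(weierstrass_p(x) - csc_squared(x)).real for x in (0.5, 1.0, 1.5)]

# Off the real axis, shift by the imaginary period pi*i:
# P comes back to (nearly) the same value, csc^2 does not.
z0 = 0.7 + 0.3j
p_shift_error = abs(weierstrass_p(z0 + PERIOD_IMAG) - weierstrass_p(z0))
csc_shift_error = abs(csc_squared(z0 + PERIOD_IMAG) - csc_squared(z0))

print("gaps on the real axis:", real_axis_gaps)
print("change in P after shifting by pi*i:", p_shift_error)
print("change in csc^2 after shifting by pi*i:", csc_shift_error)
```

The truncation is the main source of error here; raising `n_max` tightens the agreement at the cost of run time.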

Weierstrass zeta function

The Weierstrass zeta function is not an elliptic function. It is not periodic but rather quasiperiodic. The derivative of the Weierstrass zeta function is the negative of the Weierstrass elliptic function, i.e.

ζ′(x) = -℘(x)

which is analogous to the fact that the derivative of cot(x) is -csc²(x). So in that sense ζ is to ℘ as cotangent is to cosecant squared.
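The derivative relation and the quasiperiodicity can both be checked numerically. The sketch below is an illustration under assumptions, not a reference implementation: it uses the same lattice as the plots (half periods π/2 and πi/2), truncated lattice sums for ζ and ℘, and a central difference for the derivative. The helper names are hypothetical. Shifting by the real period π changes ζ by the same constant at every point (close to 1 for this lattice in this sketch) rather than by zero, which is what quasiperiodic means here.

```python
import math

# Full periods corresponding to the half periods pi/2 and pi*i/2 (assumed lattice).
PERIOD_REAL = math.pi
PERIOD_IMAG = math.pi * 1j


def lattice_points(n_max):
    """Yield the nonzero lattice points m*pi + n*pi*i with |m|, |n| <= n_max."""
    for m in range(-n_max, n_max + 1):
        for n in range(-n_max, n_max + 1):
            if m != 0 or n != 0:
                yield m * PERIOD_REAL + n * PERIOD_IMAG


def weierstrass_p(z, n_max=50):
    """Approximate the Weierstrass P function by a truncated lattice sum."""
    z = complex(z)
    total = 1 / z**2
    for w in lattice_points(n_max):
        total += 1 / (z - w) ** 2 - 1 / w**2
    return total


def weierstrass_zeta(z, n_max=50):
    """Approximate the Weierstrass zeta function by a truncated lattice sum."""
    z = complex(z)
    total = 1 / z
    for w in lattice_points(n_max):
        total += 1 / (z - w) + 1 / w + z / w**2
    return total


# Central-difference estimate of zeta'(z0), compared against -P(z0).
z0 = 0.7 + 0.3j
h = 1e-5
zeta_derivative = (weierstrass_zeta(z0 + h) - weierstrass_zeta(z0 - h)) / (2 * h)
derivative_error = abs(zeta_derivative + weierstrass_p(z0))

# Quasiperiodicity: the jump over one real period is the same at different points.
z1 = 1.1 - 0.4j
jump_at_z0 = weierstrass_zeta(z0 + PERIOD_REAL) - weierstrass_zeta(z0)
jump_at_z1 = weierstrass_zeta(z1 + PERIOD_REAL) - weierstrass_zeta(z1)

print("|zeta' + P| at z0:", derivative_error)
print("jump over one real period:", jump_at_z0, jump_at_z1)
```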

The plots of ζ(x) and cot(x) are similar as shown below.

The Mathematica code to make the plot above was

Plot[
    {WeierstrassZeta[x, WeierstrassInvariants[{Pi/2, Pi I/2}]], Cot[x]},
    {x, -10, 10},
    PlotLabels -> {"zeta", "cot"}
]

Weierstrass sigma function

The Weierstrass sigma function is also not an elliptic function. It is analogous to the sine function as follows. The logarithmic derivative of the Weierstrass sigma function is the Weierstrass zeta function, just as the logarithmic derivative of sine is cotangent. That is,

(log σ(x))′ = ζ(x).

The logarithmic derivative of a function is the derivative of its log, and so the logarithmic derivative of a function f is f′ / f.
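Written out side by side, the two chains of relations look like this. The block is just the identities from this post typeset in LaTeX (it assumes the amsmath package for the align environment); nothing new is being claimed.

```latex
\begin{align*}
\frac{d}{dx}\log \sin x &= \frac{\cos x}{\sin x} = \cot x,
  & -\frac{d}{dx}\cot x &= \csc^2 x, \\
\frac{d}{dx}\log \sigma(x) &= \frac{\sigma'(x)}{\sigma(x)} = \zeta(x),
  & -\frac{d}{dx}\zeta(x) &= \wp(x).
\end{align*}
```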

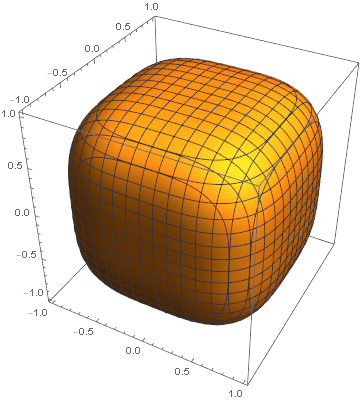

However, the plot of the sigma function, WeierstrassSigma in Mathematica, hardly looks like sine.
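A small numerical experiment shows both sides of this: the logarithmic derivative of σ does match ζ, yet σ itself grows quickly along the real axis instead of oscillating with fixed amplitude the way sine does. This is a sketch under assumptions: the lattice with half periods π/2 and πi/2 used throughout this post, a truncated version of the standard infinite product for σ, and a truncated lattice sum for ζ. The function names are hypothetical helpers, not part of any library.

```python
import cmath
import math

# Full periods corresponding to the half periods pi/2 and pi*i/2 (assumed lattice).
PERIOD_REAL = math.pi
PERIOD_IMAG = math.pi * 1j


def lattice_points(n_max):
    """Yield the nonzero lattice points m*pi + n*pi*i with |m|, |n| <= n_max."""
    for m in range(-n_max, n_max + 1):
        for n in range(-n_max, n_max + 1):
            if m != 0 or n != 0:
                yield m * PERIOD_REAL + n * PERIOD_IMAG


def weierstrass_zeta(z, n_max=50):
    """Approximate the Weierstrass zeta function by a truncated lattice sum."""
    z = complex(z)
    total = 1 / z
    for w in lattice_points(n_max):
        total += 1 / (z - w) + 1 / w + z / w**2
    return total


def weierstrass_sigma(z, n_max=50):
    """Approximate the Weierstrass sigma function by a truncated product."""
    z = complex(z)
    product = z
    for w in lattice_points(n_max):
        ratio = z / w
        product *= (1 - ratio) * cmath.exp(ratio + ratio**2 / 2)
    return product


# Logarithmic derivative sigma'/sigma via a central difference, compared with zeta.
z0 = 0.7 + 0.3j
h = 1e-5
sigma_derivative = (weierstrass_sigma(z0 + h) - weierstrass_sigma(z0 - h)) / (2 * h)
log_derivative_error = abs(sigma_derivative / weierstrass_sigma(z0) - weierstrass_zeta(z0))

# Magnitude of sigma at points one real period apart: it keeps growing.
sigma_magnitudes = [abs(weierstrass_sigma(0.4 + k * PERIOD_REAL)) for k in range(3)]

print("|sigma'/sigma - zeta| at z0:", log_derivative_error)
print("|sigma| at 0.4, 0.4 + pi, 0.4 + 2 pi:", sigma_magnitudes)
```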

So in summary, the logarithmic derivative takes Weierstrass sigma to Weierstrass zeta just as it takes sine to cotangent. The negative derivative takes Weierstrass zeta to Weierstrass ℘ just as it takes cotangent to cosecant squared.